Special-SAM – Foundation Model Fine-Tuning

Tech Stack

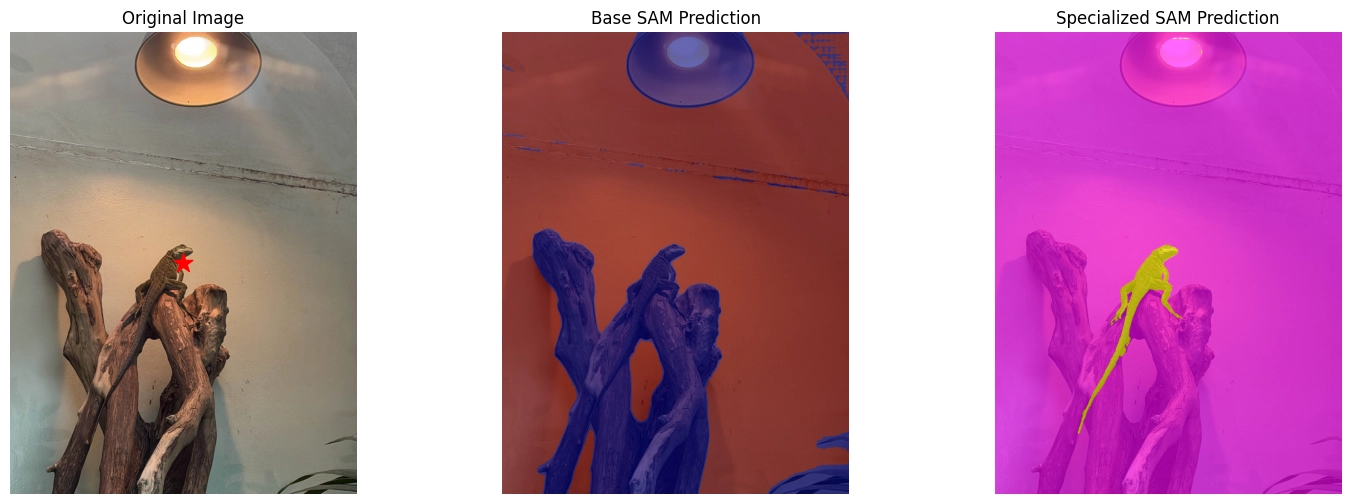

Key Results

About This Project

Special-SAM is a research project that fine-tunes Meta's Segment Anything Model (SAM) for specialized medical and scientific image segmentation tasks. It demonstrates that a foundation model can be adapted effectively to domain-specific applications through targeted fine-tuning.

The ViT-H backbone (636M parameters) was fine-tuned on a carefully curated dataset of 12,000 augmented images. The loss function combines binary cross-entropy (BCE) with Dice loss to handle the class imbalance common in segmentation tasks, where foreground pixels are often far fewer than background pixels. A multi-prompt training strategy allowed the model to generalize across different input modalities.
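As a rough illustration of how such a combined loss can be formed, the sketch below adds a binary cross-entropy term to a soft Dice term over a flattened mask. It is written in plain Python for readability and is not the project's training code. The function name `bce_dice_loss`, the equal weighting (`bce_weight=0.5`), and the constants `smooth` and `eps` are assumptions, since the write-up does not state them.

```python
import math


def bce_dice_loss(probs, targets, bce_weight=0.5, smooth=1.0, eps=1e-7):
    """Weighted sum of binary cross-entropy and soft Dice loss.

    probs:   flat list of predicted foreground probabilities in [0, 1]
    targets: flat list of ground-truth labels (0 or 1), same length
    bce_weight, smooth, eps: illustrative defaults, not values from the project
    """
    if not probs or len(probs) != len(targets):
        raise ValueError("probs and targets must be non-empty and equal in length")

    # Binary cross-entropy, averaged over pixels. Probabilities are clamped
    # away from 0 and 1 so that log() stays finite.
    bce = 0.0
    for p, t in zip(probs, targets):
        p = min(max(p, eps), 1.0 - eps)
        bce -= t * math.log(p) + (1 - t) * math.log(1.0 - p)
    bce /= len(probs)

    # Soft Dice loss: 1 - (2 * overlap) / (predicted mass + target mass).
    # The smooth term avoids division by zero on empty masks.
    intersection = sum(p * t for p, t in zip(probs, targets))
    total = sum(probs) + sum(targets)
    dice = 1.0 - (2.0 * intersection + smooth) / (total + smooth)

    return bce_weight * bce + (1.0 - bce_weight) * dice


if __name__ == "__main__":
    # One foreground pixel out of four, with an uninformative prediction.
    print(bce_dice_loss([0.5, 0.5, 0.5, 0.5], [1, 0, 0, 0]))
```

The Dice term is driven by the overlap between prediction and target rather than by per-pixel counts, which is why it is commonly paired with cross-entropy when foreground pixels are rare.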

Mean Intersection over Union (mIoU) improved from 47.52% to 66.35%, a gain of 18.83 percentage points. Training reduced the loss by 25.4% with stable convergence, which supports the effectiveness of the fine-tuning approach.
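For readers unfamiliar with the metric, the following minimal sketch shows one common way to compute mIoU: take the IoU of each predicted binary mask against its ground truth and average across samples. The write-up does not say whether the project averages per image or per class, so the per-sample averaging here is an assumption, as are the helper names `iou` and `mean_iou`. The last lines reproduce the reported gain from the two reported scores.

```python
def iou(pred, target):
    """Intersection over Union for two flat binary masks of equal length."""
    if len(pred) != len(target):
        raise ValueError("masks must have the same length")
    intersection = sum(1 for p, t in zip(pred, target) if p and t)
    union = sum(1 for p, t in zip(pred, target) if p or t)
    # Convention chosen here: two empty masks count as a perfect match.
    return 1.0 if union == 0 else intersection / union


def mean_iou(pairs):
    """Average IoU over a non-empty list of (pred, target) mask pairs."""
    scores = [iou(pred, target) for pred, target in pairs]
    return sum(scores) / len(scores)


# Reported scores from the project, in percent.
baseline_miou = 47.52
finetuned_miou = 66.35

# Absolute gain in percentage points, and the same gain relative to baseline.
point_gain = finetuned_miou - baseline_miou
relative_gain = point_gain / baseline_miou

if __name__ == "__main__":
    print(f"gain: {point_gain:.2f} percentage points")
    print(f"relative improvement: {relative_gain:.1%}")
```

Distinguishing the absolute gain in percentage points from the relative improvement over the baseline avoids ambiguity when quoting the result.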